Question # 1

Which event processing pipeline contains the regex replacement processor that would be called upon to run event masking routines on events as they are ingested?

A. Merging pipeline

B. Indexing pipeline

C. Typing pipeline

D. Parsing pipeline

C. Typing pipeline

Explanation: The typing pipeline contains the regex replacement processor that would be called upon to run event masking routines on events as they are ingested. Event masking replaces sensitive data in events with a placeholder value, such as “XXXXX”. It is configured with the SEDCMD attribute in props.conf, which specifies a sed-style regular expression to apply to the raw data of an event. The regex replacement processor executes the SEDCMD attribute after line breaking (parsing pipeline) and line merging (merging pipeline) have run, and before the events reach the indexing pipeline.
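The masking step described above can be imitated outside Splunk. The following is a minimal Python sketch, not Splunk's implementation: it applies a sed-style substitution to a raw event string. The stanza name, the class name `mask_ssn`, the pattern, and the sample event are all hypothetical and chosen only for illustration.

```python
import re

# Hypothetical props.conf entry that this sketch imitates
# (stanza and class name are illustrative assumptions):
#   [my_sourcetype]
#   SEDCMD-mask_ssn = s/\d{3}-\d{2}-\d{4}/XXXXX/g

# Pattern for a value shaped like 123-45-6789.
SSN_PATTERN = re.compile(r"\d{3}-\d{2}-\d{4}")


def mask_event(raw: str) -> str:
    """Replace every match in the raw event text with the placeholder XXXXX."""
    return SSN_PATTERN.sub("XXXXX", raw)


if __name__ == "__main__":
    event = "ssn=123-45-6789 user=alice"
    print(mask_event(event))
```

Like the `g` flag in the sed expression, `re.sub` replaces every match in the string, not only the first one.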

Question # 2

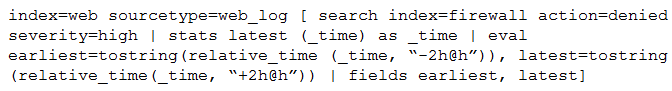

Consider the search shown below.

What is this search’s intended function? | | A. To return all the web_log events from the web index that occur two hours before and

after the most recent high severity, denied event found in the firewall index. | | B. To find all the denied, high severity events in the firewall index, and use those events to

further search for lateral movement within the web index. | | C. To return all the web_log events from the web index that occur two hours before and

after all high severity, denied events found in the firewall index. | | D. To search the firewall index for web logs that have been denied and are of high severity. |

C. To return all the web_log events from the web index that occur two hours before and after all high severity, denied events found in the firewall index.

Question # 3

Which of the following is the most efficient search?

A. Option A

B. Option B

C. Option C

D. Option D

A. Option A

Explanation: Option A is the most efficient search because it uses the tstats command, which is a fast and scalable way to search indexed fields in the _internal index. The tstats command does not need to retrieve the raw events from the index, but instead uses the tsidx files that store the metadata and summary information about the events. The tstats command also supports distributed search and can run on multiple indexers in parallel.

Option B is less efficient because it uses the stats command, which requires retrieving the raw events from the index and performing calculations on them. Option C is less efficient because it does not specify the index or source type, which means it will search all the data on the instance. Option D is less efficient because it uses the search command, which is redundant and slows down the search performance.

Question # 4

A customer has a search head cluster (SHC) of six members split evenly between two data centers (DC). The customer is concerned with network connectivity between the two DCs due to frequent outages. Which of the following is true as it relates to SHC resiliency when a network outage occurs between the two DCs?

A. The SHC will function as expected as the SHC deployer will become the new captain until the network communication is restored.

B. The SHC will stop all scheduled search activity within the SHC.

C. The SHC will function as expected as the minimum required number of nodes for a SHC is 3.

D. The SHC will function as expected as the SHC captain will fall back to previous active captain in the remaining site.

B. The SHC will stop all scheduled search activity within the SHC.

Explanation: An SHC elects its captain by majority vote, and the majority is counted against all configured members, not only the members that are currently reachable. With six members, a captain needs four votes. When the link between the two DCs fails, each DC holds only three members, so neither side has a majority. The existing captain steps down once it can no longer see a majority of members, and no new captain can be elected on either side. Because the captain schedules jobs for the whole cluster, scheduled search activity stops until network communication is restored. The members can still serve ad hoc searches as independent search heads. This is why an SHC that spans two sites should place a majority of its members in one site.

The other options are incorrect. Option A is incorrect because the SHC deployer is not a member of the SHC and does not participate in captain election. Option C is incorrect because the three-member minimum describes the smallest supported cluster; it does not allow three of six members to form a majority. Option D is incorrect because captaincy does not fall back to a previous captain; a captain is chosen only by an election, and an election needs a majority.
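The quorum arithmetic behind this scenario can be checked with a short Python sketch. It assumes that a captain election needs a strict majority of all configured members, counted across both sites; the function names and the site labels `dc1` and `dc2` are hypothetical.

```python
from typing import Dict, List


def has_majority(reachable_members: int, total_members: int) -> bool:
    """True when the reachable members form a strict majority of ALL members."""
    return reachable_members > total_members // 2


def sites_with_majority(site_sizes: Dict[str, int]) -> List[str]:
    """Return the sites that could still elect a captain after a full partition."""
    total = sum(site_sizes.values())
    return [site for site, size in site_sizes.items() if has_majority(size, total)]


if __name__ == "__main__":
    # Even split: three members per data center, as in the question.
    print(sites_with_majority({"dc1": 3, "dc2": 3}))
    # Uneven split: the larger site keeps a majority.
    print(sites_with_majority({"dc1": 4, "dc2": 2}))
```

With an even 3/3 split the first call returns an empty list, because three of six is not a strict majority. Moving one member to the other site (4/2) leaves the larger site able to elect a captain during a partition.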

Question # 5

A customer is having issues with truncated events greater than 64K. What configuration should be deployed to a universal forwarder (UF) to fix the issue?

A. None. Splunk default configurations will process the events as needed; the UF is not causing truncation.

B. Configure the best practice magic 6 or great 8 props.conf settings.

C. EVENT_BREAKER_ENABLE and EVENT_BREAKER regular expression settings per sourcetype.

D. Global EVENT_BREAKER_ENABLE and EVENT_BREAKER regular expression settings.

B. Configure the best practice magic 6 or great 8 props.conf settings.

Explanation: The universal forwarder (UF) can cause truncation of events greater than 64K if it does not have the proper props.conf settings. The best practice magic 6 or great 8 props.conf settings are a set of attributes that control how the UF handles event breaking, line merging, timestamp extraction, and host extraction. These settings ensure that the UF preserves the integrity of the events and does not truncate or break them incorrectly. Therefore, the correct answer is B, configure the best practice magic 6 or great 8 props.conf settings.

Question # 6

A customer has 30 indexers in an indexer cluster configuration and two search heads. They are working on writing SPL search for a particular use-case, but are concerned that it takes too long to run for short time durations.

How can the Search Job Inspector capabilities be used to help validate and understand the customer concerns?

A. Search Job Inspector provides statistics to show how much time and the number of events each indexer has processed.

B. Search Job Inspector provides a Search Health Check capability that provides an optimized SPL query the customer should try instead.

C. Search Job Inspector cannot be used to help troubleshoot the slow performing search; customer should review index=_introspection instead.

D. The customer is using the transaction SPL search command, which is known to be slow.

A. Search Job Inspector provides statistics to show how much time and the number of events each indexer has processed.

Explanation: Search Job Inspector provides statistics to show how much time and the number of events each indexer has processed. This can help validate and understand the customer’s concerns about the search performance and identify any bottlenecks or issues with the indexer cluster configuration. For example, the Search Job Inspector can show if some indexers are overloaded or underutilized, if there are network latency or bandwidth problems, or if there are errors or warnings during the search execution. The Search Job Inspector can also show how much time each search command takes and how many events are processed by each command.

Question # 7

A customer has a network device that transmits logs directly with UDP or TCP over SSL. Using PS best practices, which ingestion method should be used?

A. Open a TCP port with SSL on a heavy forwarder to parse and transmit the data to the indexing tier.

B. Open a UDP port on a universal forwarder to parse and transmit the data to the indexing tier.

C. Use a syslog server to aggregate the data to files and use a heavy forwarder to read and transmit the data to the indexing tier.

D. Use a syslog server to aggregate the data to files and use a universal forwarder to read and transmit the data to the indexing tier.

D. Use a syslog server to aggregate the data to files and use a universal forwarder to read and transmit the data to the indexing tier.

Explanation: The best practice for ingesting data from a network device that transmits logs directly with UDP or TCP over SSL is to send the data to a dedicated syslog server (for example syslog-ng or rsyslog), have that server write the data to files, and use a universal forwarder to monitor those files and transmit the data to the indexing tier. This method has several advantages, such as:

- The syslog server keeps receiving data and writing it to disk while Splunk components are restarted or upgraded, so data is not lost during those windows.

- Writing to files organized by sending host preserves the original host information and makes it straightforward to assign a sourcetype by file path.

- The universal forwarder has a smaller footprint than a heavy forwarder, and parsing is left to the indexing tier. A heavy forwarder is not needed just to read files.

Options A and B are less suitable because they open a network port directly on a forwarder, so data sent while the forwarder is restarting is not received, and a universal forwarder does not parse data in any case. Option C works but adds a heavy forwarder where a universal forwarder is sufficient. Therefore, the correct answer is D, use a syslog server to aggregate the data to files and use a universal forwarder to read and transmit the data to the indexing tier.
