Question # 1

| While working on a neural network project, a Machine Learning Specialist discovers thai

some features in the data have very high magnitude resulting in this data being weighted

more in the cost function What should the Specialist do to ensure better convergence

during backpropagation? | | A. Dimensionality reduction

| | B. Data normalization

| | C. Model regulanzation

| | D. Data augmentation for the minority class |

B. Data normalization

Explanation: Data normalization is a data preprocessing technique that scales the

features to a common range, such as [0, 1] or [-1, 1]. This helps reduce the impact of

features with high magnitude on the cost function and improves the convergence during

backpropagation. Data normalization can be done using different methods, such as minmax

scaling, z-score standardization, or unit vector normalization. Data normalization is

different from dimensionality reduction, which reduces the number of features; model

regularization, which adds a penalty term to the cost function to prevent overfitting; and

data augmentation, which increases the amount of data by creating synthetic samples.

Question # 2

A company is building a new version of a recommendation engine. Machine learning (ML)

specialists need to keep adding new data from users to improve personalized

recommendations. The ML specialists gather data from the users’ interactions on the

platform and from sources such as external websites and social media.

The pipeline cleans, transforms, enriches, and compresses terabytes of data daily, and this

data is stored in Amazon S3. A set of Python scripts was coded to do the job and is stored

in a large Amazon EC2 instance. The whole process takes more than 20 hours to finish,

with each script taking at least an hour. The company wants to move the scripts out of

Amazon EC2 into a more managed solution that will eliminate the need to maintain servers.

Which approach will address all of these requirements with the LEAST development effort? | | A. Load the data into an Amazon Redshift cluster. Execute the pipeline by using SQL.

Store the results in Amazon S3.

| | B. Load the data into Amazon DynamoDB. Convert the scripts to an AWS Lambda

function. Execute the pipeline by triggering Lambda executions. Store the results in

Amazon S3.

| | C. Create an AWS Glue job. Convert the scripts to PySpark. Execute the pipeline. Store

the results in Amazon S3.

| | D. Create a set of individual AWS Lambda functions to execute each of the scripts. Build a

step function by using the AWS Step Functions Data Science SDK. Store the results in

Amazon S3. |

C. Create an AWS Glue job. Convert the scripts to PySpark. Execute the pipeline. Store

the results in Amazon S3.

Explanation: The best approach to address all of the requirements with the least

development effort is to create an AWS Glue job, convert the scripts to PySpark, execute

the pipeline, and store the results in Amazon S3. This is because:

-

AWS Glue is a fully managed extract, transform, and load (ETL) service that

makes it easy to prepare and load data for analytics 1. AWS Glue can run Python

and Scala scripts to process data from various sources, such as Amazon S3,

Amazon DynamoDB, Amazon Redshift, and more 2. AWS Glue also provides a

serverless Apache Spark environment to run ETL jobs, eliminating the need to

provision and manage servers 3.

-

PySpark is the Python API for Apache Spark, a unified analytics engine for largescale

data processing 4. PySpark can perform various data transformations and

manipulations on structured and unstructured data, such as cleaning, enriching,

and compressing 5. PySpark can also leverage the distributed computing power of

Spark to handle terabytes of data efficiently and scalably 6.

-

By creating an AWS Glue job and converting the scripts to PySpark, the company

can move the scripts out of Amazon EC2 into a more managed solution that will

eliminate the need to maintain servers. The company can also reduce the

development effort by using the AWS Glue console, AWS SDK, or AWS CLI to

create and run the job 7. Moreover, the company can use the AWS Glue Data

Catalog to store and manage the metadata of the data sources and targets 8.

The other options are not as suitable as option C for the following reasons:

-

Option A is not optimal because loading the data into an Amazon Redshift cluster

and executing the pipeline by using SQL will incur additional costs and complexity

for the company. Amazon Redshift is a fully managed data warehouse service that

enables fast and scalable analysis of structured data . However, it is not designed

for ETL purposes, such as cleaning, transforming, enriching, and compressing

data. Moreover, using SQL to perform these tasks may not be as expressive and

flexible as using Python scripts. Furthermore, the company will have to provision

and configure the Amazon Redshift cluster, and load and unload the data from

Amazon S3, which will increase the development effort and time.

-

Option B is not feasible because loading the data into Amazon DynamoDB and

converting the scripts to an AWS Lambda function will not work for the company’s

use case. Amazon DynamoDB is a fully managed key-value and document

database service that provides fast and consistent performance at any scale .

However, it is not suitable for storing and processing terabytes of data daily, as it

has limits on the size and throughput of each table and item . Moreover, using

AWS Lambda to execute the pipeline will not be efficient or cost-effective, as

Lambda has limits on the memory, CPU, and execution time of each function .

Therefore, using Amazon DynamoDB and AWS Lambda will not meet the

company’s requirements for processing large amounts of data quickly and reliably.

-

Option D is not relevant because creating a set of individual AWS Lambda

functions to execute each of the scripts and building a step function by using the

AWS Step Functions Data Science SDK will not address the main issue of moving

the scripts out of Amazon EC2. AWS Step Functions is a fully managed service

that lets you coordinate multiple AWS services into serverless workflows . The

AWS Step Functions Data Science SDK is an open source library that allows data

scientists to easily create workflows that process and publish machine learning

models using Amazon SageMaker and AWS Step Functions . However, these

services and tools are not designed for ETL purposes, such as cleaning,

transforming, enriching, and compressing data. Moreover, as mentioned in option

B, using AWS Lambda to execute the scripts will not be efficient or cost-effective

for the company’s use case.

Question # 3

A retail company uses a machine learning (ML) model for daily sales forecasting. The

company’s brand manager reports that the model has provided inaccurate results for the

past 3 weeks.

At the end of each day, an AWS Glue job consolidates the input data that is used for the

forecasting with the actual daily sales data and the predictions of the model. The AWS

Glue job stores the data in Amazon S3. The company’s ML team is using an Amazon

SageMaker Studio notebook to gain an understanding about the source of the model's

inaccuracies.

What should the ML team do on the SageMaker Studio notebook to visualize the model's

degradation MOST accurately? | | A. Create a histogram of the daily sales over the last 3 weeks. In addition, create a

histogram of the daily sales from before that period.

| | B. Create a histogram of the model errors over the last 3 weeks. In addition, create a

histogram of the model errors from before that period.

| | C. Create a line chart with the weekly mean absolute error (MAE) of the model.

| | D. Create a scatter plot of daily sales versus model error for the last 3 weeks. In addition,

create a scatter plot of daily sales versus model error from before that period. |

B. Create a histogram of the model errors over the last 3 weeks. In addition, create a

histogram of the model errors from before that period.

Explanation: The best way to visualize the model’s degradation is to create a histogram of

the model errors over the last 3 weeks and compare it with a histogram of the model errors

from before that period. A histogram is a graphical representation of the distribution of

numerical data. It shows how often each value or range of values occurs in the data. A

model error is the difference between the actual value and the predicted value. A high model error indicates a poor fit of the model to the data. By comparing the histograms of

the model errors, the ML team can see if there is a significant change in the shape, spread,

or center of the distribution. This can indicate if the model is underfitting, overfitting, or

drifting from the data. A line chart or a scatter plot would not be as effective as a histogram

for this purpose, because they do not show the distribution of the errors. A line chart would

only show the trend of the errors over time, which may not capture the variability or outliers.

A scatter plot would only show the relationship between the errors and another variable,

such as daily sales, which may not be relevant or informative for the model’s performance.

Question # 4

| A Machine Learning Specialist is preparing data for training on Amazon SageMaker The

Specialist is transformed into a numpy .array, which appears to be negatively affecting the

speed of the training

What should the Specialist do to optimize the data for training on SageMaker'? | | A. Use the SageMaker batch transform feature to transform the training data into a

DataFrame

| | B. Use AWS Glue to compress the data into the Apache Parquet format

| | C. Transform the dataset into the Recordio protobuf format

| | D. Use the SageMaker hyperparameter optimization feature to automatically optimize the

data |

C. Transform the dataset into the Recordio protobuf format

Explanation: The Recordio protobuf format is a binary data format that is optimized for

training on SageMaker. It allows faster data loading and lower memory usage compared to

other formats such as CSV or numpy arrays. The Recordio protobuf format also supports

features such as sparse input, variable-length input, and label embedding. To use the

Recordio protobuf format, the data needs to be serialized and deserialized using the

appropriate libraries. Some of the built-in algorithms in SageMaker support the Recordio

protobuf format as a content type for training and inference.

Question # 5

A company plans to build a custom natural language processing (NLP) model to classify

and prioritize user feedback. The company hosts the data and all machine learning (ML)

infrastructure in the AWS Cloud. The ML team works from the company's office, which has

an IPsec VPN connection to one VPC in the AWS Cloud.

The company has set both the enableDnsHostnames attribute and the enableDnsSupport

attribute of the VPC to true. The company's DNS resolvers point to the VPC DNS. The

company does not allow the ML team to access Amazon SageMaker notebooks through

connections that use the public internet. The connection must stay within a private network

and within the AWS internal network.

Which solution will meet these requirements with the LEAST development effort? | | A. Create a VPC interface endpoint for the SageMaker notebook in the VPC. Access the

notebook through a VPN connection and the VPC endpoint.

| | B. Create a bastion host by using Amazon EC2 in a public subnet within the VPC. Log in to

the bastion host through a VPN connection. Access the SageMaker notebook from the

bastion host.

| | C. Create a bastion host by using Amazon EC2 in a private subnet within the VPC with a

NAT gateway. Log in to the bastion host through a VPN connection. Access the

SageMaker notebook from the bastion host.

| | D. Create a NAT gateway in the VPC. Access the SageMaker notebook HTTPS endpoint

through a VPN connection and the NAT gateway. |

A. Create a VPC interface endpoint for the SageMaker notebook in the VPC. Access the

notebook through a VPN connection and the VPC endpoint.

Explanation: In this scenario, the company requires that access to the Amazon

SageMaker notebook remain within the AWS internal network, avoiding the public internet.

By creating a VPC interface endpoint for SageMaker, the company can ensure that traffic

to the SageMaker notebook remains internal to the VPC and is accessible over a private

connection. The VPC interface endpoint allows private network access to AWS services,

and it operates over AWS’s internal network, respecting the security and connectivity

policies the company requires.

This solution requires minimal development effort compared to options involving bastion

hosts or NAT gateways, as it directly provides private network access to the SageMaker

notebook.

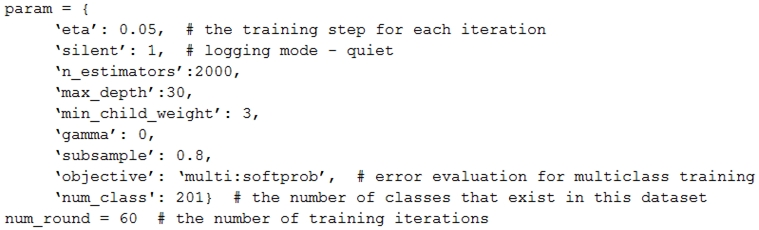

Question # 6

A Machine Learning Specialist is assigned to a Fraud Detection team and must tune an

XGBoost model, which is working appropriately for test data. However, with unknown data,

it is not working as expected. The existing parameters are provided as follows.

Which parameter tuning guidelines should the Specialist follow to avoid overfitting? | | A. Increase the max_depth parameter value. | | B. Lower the max_depth parameter value.

| | C. Update the objective to binary:logistic.

| | D. Lower the min_child_weight parameter value. |

B. Lower the max_depth parameter value.

Explanation: Overfitting occurs when a model performs well on the training data but poorly

on the test data. This is often because the model has learned the training data too well and

is not able to generalize to new data. To avoid overfitting, the Machine Learning Specialist

should lower the max_depth parameter value. This will reduce the complexity of the model

and make it less likely to overfit. According to the XGBoost documentation1, the

max_depth parameter controls the maximum depth of a tree and lower values can help

prevent overfitting. The documentation also suggests other ways to control overfitting, such

as adding randomness, using regularization, and using early stopping1.

Question # 7

A Marketing Manager at a pet insurance company plans to launch a targeted marketing

campaign on social media to acquire new customers Currently, the company has the

following data in Amazon Aurora

• Profiles for all past and existing customers

• Profiles for all past and existing insured pets

• Policy-level information

• Premiums received

• Claims paid

What steps should be taken to implement a machine learning model to identify potential

new customers on social media? | | A. Use regression on customer profile data to understand key characteristics of consumer

segments Find similar profiles on social media.

| | B. Use clustering on customer profile data to understand key characteristics of consumer

segments Find similar profiles on social media.

| | C. Use a recommendation engine on customer profile data to understand key

characteristics of consumer segments. Find similar profiles on social media

| | D. Use a decision tree classifier engine on customer profile data to understand key

characteristics of consumer segments. Find similar profiles on social media |

B. Use clustering on customer profile data to understand key characteristics of consumer

segments Find similar profiles on social media.

Explanation: Clustering is a machine learning technique that can group data points into

clusters based on their similarity or proximity. Clustering can help discover the underlying

structure and patterns in the data, as well as identify outliers or anomalies. Clustering can

also be used for customer segmentation, which is the process of dividing customers into

groups based on their characteristics, behaviors, preferences, or needs. Customer

segmentation can help understand the key features and needs of different customer

segments, as well as design and implement targeted marketing campaigns for each

segment. In this case, the Marketing Manager at a pet insurance company plans to launch

a targeted marketing campaign on social media to acquire new customers. To do this, the

Manager can use clustering on customer profile data to understand the key characteristics

of consumer segments, such as their demographics, pet types, policy preferences,

premiums paid, claims made, etc. The Manager can then find similar profiles on social

media, such as Facebook, Twitter, Instagram, etc., by using the cluster features as filters or

keywords. The Manager can then target these potential new customers with personalized

and relevant ads or offers that match their segment’s needs and interests. This way, the

Manager can implement a machine learning model to identify potential new customers on

social media.

Amazon Web Services MLS-C01 Exam Dumps

5 out of 5

Pass Your AWS Certified Machine Learning - Specialty Exam in First Attempt With MLS-C01 Exam Dumps. Real AWS Certified Specialty Exam Questions As in Actual Exam!

— 307 Questions With Valid Answers

— Updation Date : 15-Apr-2025

— Free MLS-C01 Updates for 90 Days

— 98% AWS Certified Machine Learning - Specialty Exam Passing Rate

PDF Only Price 49.99$

19.99$

Buy PDF

Speciality

Additional Information

Testimonials

Related Exams

- Number 1 Amazon Web Services AWS Certified Specialty study material online

- Regular MLS-C01 dumps updates for free.

- AWS Certified Machine Learning - Specialty Practice exam questions with their answers and explaination.

- Our commitment to your success continues through your exam with 24/7 support.

- Free MLS-C01 exam dumps updates for 90 days

- 97% more cost effective than traditional training

- AWS Certified Machine Learning - Specialty Practice test to boost your knowledge

- 100% correct AWS Certified Specialty questions answers compiled by senior IT professionals

Amazon Web Services MLS-C01 Braindumps

Realbraindumps.com is providing AWS Certified Specialty MLS-C01 braindumps which are accurate and of high-quality verified by the team of experts. The Amazon Web Services MLS-C01 dumps are comprised of AWS Certified Machine Learning - Specialty questions answers available in printable PDF files and online practice test formats. Our best recommended and an economical package is AWS Certified Specialty PDF file + test engine discount package along with 3 months free updates of MLS-C01 exam questions. We have compiled AWS Certified Specialty exam dumps question answers pdf file for you so that you can easily prepare for your exam. Our Amazon Web Services braindumps will help you in exam. Obtaining valuable professional Amazon Web Services AWS Certified Specialty certifications with MLS-C01 exam questions answers will always be beneficial to IT professionals by enhancing their knowledge and boosting their career.

Yes, really its not as tougher as before. Websites like Realbraindumps.com are playing a significant role to make this possible in this competitive world to pass exams with help of AWS Certified Specialty MLS-C01 dumps questions. We are here to encourage your ambition and helping you in all possible ways. Our excellent and incomparable Amazon Web Services AWS Certified Machine Learning - Specialty exam questions answers study material will help you to get through your certification MLS-C01 exam braindumps in the first attempt.

Pass Exam With Amazon Web Services AWS Certified Specialty Dumps. We at Realbraindumps are committed to provide you AWS Certified Machine Learning - Specialty braindumps questions answers online. We recommend you to prepare from our study material and boost your knowledge. You can also get discount on our Amazon Web Services MLS-C01 dumps. Just talk with our support representatives and ask for special discount on AWS Certified Specialty exam braindumps. We have latest MLS-C01 exam dumps having all Amazon Web Services AWS Certified Machine Learning - Specialty dumps questions written to the highest standards of technical accuracy and can be instantly downloaded and accessed by the candidates when once purchased. Practicing Online AWS Certified Specialty MLS-C01 braindumps will help you to get wholly prepared and familiar with the real exam condition. Free AWS Certified Specialty exam braindumps demos are available for your satisfaction before purchase order.

Send us mail if you want to check Amazon Web Services MLS-C01 AWS Certified Machine Learning - Specialty DEMO before your purchase and our support team will send you in email.

If you don't find your dumps here then you can request what you need and we shall provide it to you.

Bulk Packages

$50

- Get 3 Exams PDF

- Get $33 Discount

- Mention Exam Codes in Payment Description.

Buy 3 Exams PDF

$70

- Get 5 Exams PDF

- Get $65 Discount

- Mention Exam Codes in Payment Description.

Buy 5 Exams PDF

$100

- Get 5 Exams PDF + Test Engine

- Get $105 Discount

- Mention Exam Codes in Payment Description.

Buy 5 Exams PDF + Engine

Jessica Doe

AWS Certified Specialty

We are providing Amazon Web Services MLS-C01 Braindumps with practice exam question answers. These will help you to prepare your AWS Certified Machine Learning - Specialty exam. Buy AWS Certified Specialty MLS-C01 dumps and boost your knowledge.

|