Question # 1

A company has deployed an XGBoost prediction model in production to predict if a customer is likely to cancel a subscription. The company uses Amazon SageMaker Model Monitor to detect deviations in the F1 score.

During a baseline analysis of model quality, the company recorded a threshold for the F1 score. After several months of no change, the model's F1 score decreases significantly.

What could be the reason for the reduced F1 score?

A. Concept drift occurred in the underlying customer data that was used for predictions.

B. The model was not sufficiently complex to capture all the patterns in the original baseline data.

C. The original baseline data had a data quality issue of missing values.

D. Incorrect ground truth labels were provided to Model Monitor during the calculation of the baseline.

Answer: A. Concept drift occurred in the underlying customer data that was used for predictions.

Explanation:

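Problem Description: The model's F1 score held steady against the recorded baseline threshold for several months and then decreased significantly.

Why Concept Drift? Concept drift means that the relationship between the input features and the target (here, whether a customer cancels) changes over time. A model trained on older behavior then predicts current customers less accurately, which lowers the F1 score.

Signs of Concept Drift: Model quality metrics that were stable after deployment degrade even though the model and the baseline have not changed.

Solution: Retrain the model with new data that reflects current customer behavior.

Why Not Other Options?: Options B, C, and D describe problems with the original model or the baseline. Those problems would have affected the F1 score from the start, not after several months of stable performance.

Conclusion: The decrease in F1 score is most likely due to concept drift in the customer data, requiring retraining of the model with new data.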
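To make the monitoring idea concrete, here is a minimal sketch of comparing F1 per time window against a baseline threshold. It is plain Python and not the SageMaker Model Monitor API; the function names, the threshold of 0.8, and the sample labels are all hypothetical.

```python
from typing import List, Sequence, Tuple


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Binary F1 for labels in {0, 1}; returns 0.0 when there are no true positives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def windows_below_threshold(
    windows: List[Tuple[Sequence[int], Sequence[int]]], threshold: float
) -> List[int]:
    """Return the indices of windows whose F1 falls below the baseline threshold."""
    return [
        i for i, (y_true, y_pred) in enumerate(windows)
        if f1_score(y_true, y_pred) < threshold
    ]


# Hypothetical ground truth and predictions for two monitoring windows.
early_window = ([1, 1, 0, 0, 1, 0], [1, 1, 0, 0, 1, 0])  # predictions still match
late_window = ([1, 1, 0, 0, 1, 0], [0, 1, 1, 0, 0, 1])   # behavior has shifted

BASELINE_THRESHOLD = 0.8  # assumed value recorded during baselining
violations = windows_below_threshold([early_window, late_window], BASELINE_THRESHOLD)
```

In this toy data only the later window violates the threshold, which is the pattern the question describes: a stable period followed by a drop.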

Question # 2

A company has developed a new ML model. The company requires online model validation on 10% of the traffic before the company fully releases the model in production. The company uses an Amazon SageMaker endpoint behind an Application Load Balancer (ALB) to serve the model.

Which solution will set up the required online validation with the LEAST operational overhead?

A. Use production variants to add the new model to the existing SageMaker endpoint. Set the variant weight to 0.1 for the new model. Monitor the number of invocations by using Amazon CloudWatch.

B. Use production variants to add the new model to the existing SageMaker endpoint. Set the variant weight to 1 for the new model. Monitor the number of invocations by using Amazon CloudWatch.

C. Create a new SageMaker endpoint. Use production variants to add the new model to the new endpoint. Monitor the number of invocations by using Amazon CloudWatch.

D. Configure the ALB to route 10% of the traffic to the new model at the existing SageMaker endpoint. Monitor the number of invocations by using AWS CloudTrail.

Answer: A. Use production variants to add the new model to the existing SageMaker endpoint. Set the variant weight to 0.1 for the new model. Monitor the number of invocations by using Amazon CloudWatch.

Explanation:

Scenario: The company wants to perform online validation of a new ML model on 10% of the traffic before fully deploying the model in production. The setup must have minimal operational overhead.

Why Use SageMaker Production Variants?

Built-In Traffic Splitting: Amazon SageMaker endpoints support production variants, allowing multiple models to run on a single endpoint. You can direct a percentage of incoming traffic to each variant by adjusting the variant weights.

Ease of Management: Using production variants eliminates the need for additional infrastructure like separate endpoints or custom ALB configurations.

Monitoring with CloudWatch: SageMaker publishes endpoint metrics to CloudWatch, including per-variant invocation counts and latency, so no extra monitoring setup is needed.
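Variant weights are relative: each variant receives its weight divided by the sum of all weights. The sketch below shows that arithmetic in plain Python. The helper name `traffic_shares` is hypothetical and the variant names are taken from the sample in this explanation.

```python
from typing import Dict


def traffic_shares(weights: Dict[str, float]) -> Dict[str, float]:
    """Convert relative variant weights into the fraction of traffic each variant gets."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one variant needs a positive weight")
    return {name: weight / total for name, weight in weights.items()}


# Weights of 0.9 and 0.1 give a 90/10 split.
split = traffic_shares({"current-model": 0.9, "new-model": 0.1})

# The same split expressed with different absolute weights.
same_split = traffic_shares({"current-model": 9, "new-model": 1})

# Equal weights split traffic evenly, which would not meet the 10% requirement.
even_split = traffic_shares({"current-model": 1, "new-model": 1})
```

Because only the ratio matters, a weight of 0.1 for the new model yields 10% of traffic when the existing variant's weight is 0.9.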

Steps to Implement:

Deploy the New Model as a Production Variant:

Example SDK Code:

```python
import boto3

sm_client = boto3.client('sagemaker')

# Shift traffic between two variants that already exist on the endpoint:
# 90% to the current model and 10% to the new model.
response = sm_client.update_endpoint_weights_and_capacities(
    EndpointName='existing-endpoint-name',
    DesiredWeightsAndCapacities=[
        {'VariantName': 'current-model', 'DesiredWeight': 0.9},
        {'VariantName': 'new-model', 'DesiredWeight': 0.1}
    ]
)
```

Set the Variant Weight:

Monitor the Performance:

Validate the Results:

Why Not the Other Options?

Option B: Setting the weight to 1 does not produce the required 10% split. Variant weights are relative, so if the existing variant also has a weight of 1, the new model receives half of the traffic.

Option C: Creating a new endpoint introduces additional operational overhead for traffic routing and monitoring, which is unnecessary given SageMaker's built-in production variant capability.

Option D: Configuring the ALB to route traffic requires manual setup and lacks SageMaker's built-in variant monitoring and traffic splitting features.

Conclusion: Using production variants with a weight of 0.1 for the new model on the existing SageMaker endpoint provides the required traffic split for online validation with minimal operational overhead.
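The monitoring part of option A can be sketched as a CloudWatch query for the `Invocations` metric of one variant. This is a rough sketch, assuming the endpoint and variant names from the sample above and default AWS credentials; the helper names `invocation_query` and `total_invocations` are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict


def invocation_query(endpoint_name: str, variant_name: str, hours: int = 1) -> Dict[str, Any]:
    """Build get_metric_statistics arguments for one variant's invocation count."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/SageMaker",
        "MetricName": "Invocations",
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": 300,  # five-minute buckets
        "Statistics": ["Sum"],
    }


def total_invocations(endpoint_name: str, variant_name: str) -> float:
    """Sum the invocations over the query window (requires boto3 and AWS access)."""
    import boto3  # imported here so the query builder works without boto3 installed

    cloudwatch = boto3.client("cloudwatch")
    response = cloudwatch.get_metric_statistics(
        **invocation_query(endpoint_name, variant_name)
    )
    return sum(point["Sum"] for point in response["Datapoints"])


query = invocation_query("existing-endpoint-name", "new-model")
```

Comparing the totals for `new-model` and `current-model` over the same window gives a quick check that the observed split is close to 10/90.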

Question # 3

A company wants to improve the sustainability of its ML operations.

Which actions will reduce the energy usage and computational resources that are associated with the company's training jobs? (Choose two.)

A. Use Amazon SageMaker Debugger to stop training jobs when non-converging conditions are detected.

B. Use Amazon SageMaker Ground Truth for data labeling.

C. Deploy models by using AWS Lambda functions.

D. Use AWS Trainium instances for training.

E. Use PyTorch or TensorFlow with the distributed training option.

Answer:

A. Use Amazon SageMaker Debugger to stop training jobs when non-converging conditions are detected.

D. Use AWS Trainium instances for training.

Explanation:

SageMaker Debugger can identify when a training job is not converging or is stuck in a nonproductive state. Stopping these jobs early avoids wasting energy and computational resources, which improves sustainability.

AWS Trainium instances are purpose-built for ML training and are optimized for energy efficiency and cost-effectiveness. They use less energy per training task than general-purpose instances, making them a sustainable choice.
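The idea behind stopping a non-converging job can be illustrated with a small loss check. This is a plain-Python sketch of the concept, not the SageMaker Debugger API; the function names, the `patience` and `min_delta` parameters, and the loss values are hypothetical.

```python
from typing import Iterable, List


def should_stop(losses: List[float], patience: int = 3, min_delta: float = 1e-3) -> bool:
    """True when none of the last `patience` losses improved on the earlier best by min_delta."""
    if len(losses) <= patience:
        return False
    best_before = min(losses[:-patience])
    return all(loss > best_before - min_delta for loss in losses[-patience:])


def steps_run(loss_stream: Iterable[float], patience: int = 3) -> int:
    """Consume losses step by step and return how many steps ran before stopping."""
    seen: List[float] = []
    for loss in loss_stream:
        seen.append(loss)
        if should_stop(seen, patience):
            break
    return len(seen)


# Hypothetical job whose loss stalls at 0.5 after the second step.
stalled_losses = [1.0, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5]
executed = steps_run(stalled_losses)
```

Here the job stops after five of the seven planned steps, so the remaining compute is never spent.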

Question # 4

A company is using an Amazon Redshift database as its single data source. Some of the data is sensitive.

A data scientist needs to use some of the sensitive data from the database. An ML engineer must give the data scientist access to the data without transforming the source data and without storing anonymized data in the database.

Which solution will meet these requirements with the LEAST implementation effort?

A. Configure dynamic data masking policies to control how sensitive data is shared with the data scientist at query time.

B. Create a materialized view with masking logic on top of the database. Grant the necessary read permissions to the data scientist.

C. Unload the Amazon Redshift data to Amazon S3. Use Amazon Athena to create schema-on-read with masking logic. Share the view with the data scientist.

D. Unload the Amazon Redshift data to Amazon S3. Create an AWS Glue job to anonymize the data. Share the dataset with the data scientist.

Answer: A. Configure dynamic data masking policies to control how sensitive data is shared with the data scientist at query time.

Explanation:

Dynamic data masking allows you to control how sensitive data is presented to users at query time, without modifying or storing transformed versions of the source data. Amazon Redshift supports dynamic data masking, which can be implemented with minimal effort. This solution ensures that the data scientist can access the required information while sensitive data remains protected, meeting the requirements efficiently and with the least implementation effort.
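The following toy model shows what "masking at query time" means: the stored rows never change, and the mask is applied per role when results are returned. It is plain Python for illustration only and does not use Redshift syntax; the table contents, role names, and helper names are hypothetical.

```python
from typing import Callable, Dict, List

# Source data stays exactly as stored; nothing masked is written back.
SOURCE_ROWS: List[Dict[str, object]] = [
    {"customer_id": 1, "email": "ana@example.com", "plan": "pro"},
    {"customer_id": 2, "email": "bo@example.com", "plan": "free"},
]


def mask_email(value: str) -> str:
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = value.partition("@")
    return local[:1] + "***@" + domain


# Which columns are masked for which role, and how.
MASKING_POLICIES: Dict[str, Dict[str, Callable[[str], str]]] = {
    "data_scientist": {"email": mask_email},
}


def query(role: str) -> List[Dict[str, object]]:
    """Return all rows, applying the role's masking policies to the result only."""
    policies = MASKING_POLICIES.get(role, {})
    return [
        {col: policies[col](val) if col in policies else val for col, val in row.items()}
        for row in SOURCE_ROWS
    ]


scientist_view = query("data_scientist")
admin_view = query("admin")
```

The data scientist sees masked email addresses while other roles and the stored rows are unaffected, which is why no anonymized copy has to be kept in the database.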

Question # 5

A company wants to reduce the cost of its containerized ML applications. The applications use ML models that run on Amazon EC2 instances, AWS Lambda functions, and an Amazon Elastic Container Service (Amazon ECS) cluster. The EC2 workloads and ECS workloads use Amazon Elastic Block Store (Amazon EBS) volumes to save predictions and artifacts.

An ML engineer must identify resources that are being used inefficiently. The ML engineer also must generate recommendations to reduce the cost of these resources.

Which solution will meet these requirements with the LEAST development effort?

A. Create code to evaluate each instance's memory and compute usage.

B. Add cost allocation tags to the resources. Activate the tags in AWS Billing and Cost Management.

C. Check AWS CloudTrail event history for the creation of the resources.

D. Run AWS Compute Optimizer.

Answer: D. Run AWS Compute Optimizer.

Explanation:

AWS Compute Optimizer analyzes the resource usage of Amazon EC2 instances, ECS services, Lambda functions, and Amazon EBS volumes. It provides actionable recommendations to optimize resource utilization and reduce costs, such as rightsizing instances, adjusting Lambda memory settings, or changing EBS volume types. This solution requires the least development effort because Compute Optimizer is a managed service that automatically generates insights and recommendations based on historical usage data.
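As a rough illustration of how such recommendations might be consumed, the sketch below filters a response for instances that are not already optimized. The sample dictionary is hypothetical and only mimics the general shape of an EC2 recommendation response; the field names and finding values are assumptions that should be checked against the Compute Optimizer API reference.

```python
from typing import Any, Dict, List

# Hypothetical sample shaped like an EC2 recommendation response (assumed field names).
SAMPLE_RESPONSE: Dict[str, Any] = {
    "instanceRecommendations": [
        {
            "instanceArn": "arn:aws:ec2:us-east-1:111122223333:instance/i-0example1",
            "currentInstanceType": "m5.4xlarge",
            "finding": "OVER_PROVISIONED",
            "recommendationOptions": [{"instanceType": "m5.xlarge", "rank": 1}],
        },
        {
            "instanceArn": "arn:aws:ec2:us-east-1:111122223333:instance/i-0example2",
            "currentInstanceType": "c5.large",
            "finding": "OPTIMIZED",
            "recommendationOptions": [{"instanceType": "c5.large", "rank": 1}],
        },
    ]
}


def _normalize(finding: str) -> str:
    """Compare findings without depending on case or underscores."""
    return finding.replace("_", "").lower()


def rightsizing_candidates(response: Dict[str, Any]) -> List[Dict[str, str]]:
    """List instances that are not optimized, with the top-ranked suggested type."""
    candidates = []
    for rec in response.get("instanceRecommendations", []):
        if _normalize(rec["finding"]) == "optimized":
            continue
        options = sorted(rec.get("recommendationOptions", []), key=lambda o: o["rank"])
        if options:
            candidates.append({
                "arn": rec["instanceArn"],
                "current": rec["currentInstanceType"],
                "suggested": options[0]["instanceType"],
            })
    return candidates


def fetch_ec2_recommendations() -> Dict[str, Any]:
    """Fetch live recommendations (requires boto3, AWS access, and Compute Optimizer opt-in)."""
    import boto3  # imported here so the sample runs without boto3 installed

    return boto3.client("compute-optimizer").get_ec2_instance_recommendations()


candidates = rightsizing_candidates(SAMPLE_RESPONSE)
```

The point of the answer is that this analysis already exists as a managed service, so the only custom code left is reading and acting on the results.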

Question # 6

An ML engineer needs to use data with Amazon SageMaker Canvas to train an ML model. The data is stored in Amazon S3 and is complex in structure. The ML engineer must use a file format that minimizes processing time for the data.

Which file format will meet these requirements?

A. CSV files compressed with Snappy

B. JSON objects in JSONL format

C. JSON files compressed with gzip

D. Apache Parquet files

Answer: D. Apache Parquet files

Explanation:

Apache Parquet is a columnar storage file format optimized for complex and large datasets. It provides efficient reading and processing by accessing only the required columns, which reduces I/O and speeds up data handling. This makes it ideal for use with Amazon SageMaker Canvas, where minimizing processing time is important for training ML models. Parquet is also compatible with S3 and widely supported in data analytics and ML workflows.
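A toy comparison shows why a columnar layout reduces the work needed to read a single column. This sketch uses in-memory Python structures rather than real Parquet files; the rows and helper names are hypothetical, and counting "values touched" is only a stand-in for I/O.

```python
from typing import Any, Dict, List, Tuple

ROWS: List[Dict[str, Any]] = [
    {"customer_id": 1, "plan": "pro", "monthly_spend": 40.0},
    {"customer_id": 2, "plan": "free", "monthly_spend": 0.0},
    {"customer_id": 3, "plan": "pro", "monthly_spend": 40.0},
]


def read_column_row_layout(rows: List[Dict[str, Any]], column: str) -> Tuple[List[Any], int]:
    """Row layout: every field of every record is visited to extract one column."""
    values, touched = [], 0
    for row in rows:
        for name, value in row.items():
            touched += 1
            if name == column:
                values.append(value)
    return values, touched


def to_columnar(rows: List[Dict[str, Any]]) -> Dict[str, List[Any]]:
    """Store each column's values together, as a columnar format does."""
    return {name: [row[name] for row in rows] for name in rows[0]}


def read_column_columnar(columns: Dict[str, List[Any]], column: str) -> Tuple[List[Any], int]:
    """Columnar layout: only the requested column's values are visited."""
    values = columns[column]
    return list(values), len(values)


row_values, row_touched = read_column_row_layout(ROWS, "monthly_spend")
col_values, col_touched = read_column_columnar(to_columnar(ROWS), "monthly_spend")
```

Both reads return the same values, but the columnar read touches a third as many values here. With real files, libraries such as pandas expose the same idea through the `columns` argument of `read_parquet`.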

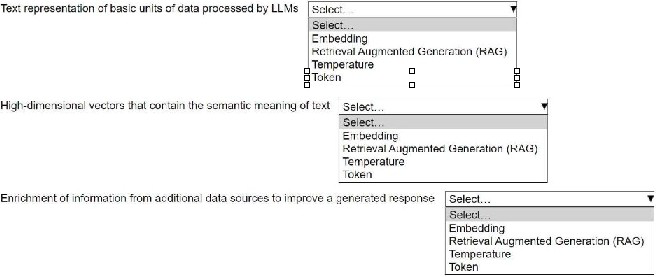

Question # 7

An ML engineer is building a generative AI application on Amazon Bedrock by using large language models (LLMs).

Select the correct generative AI term from the following list for each description. Each term should be selected one time or not at all. (Select three.)

• Embedding

• Retrieval Augmented Generation (RAG)

• Temperature

• Token

Amazon Web Services MLA-C01 Exam Dumps

Last updated: 28-Mar-2025
